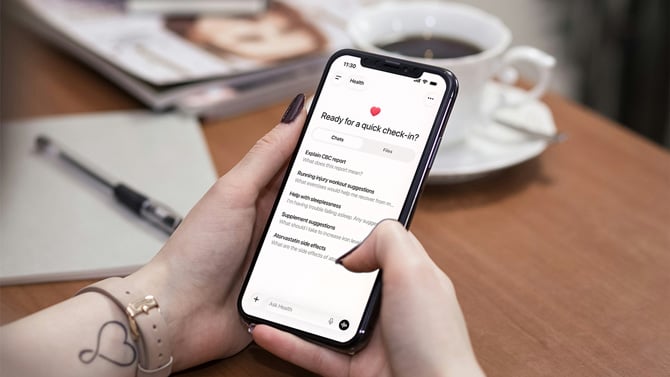

OpenAI has launched ChatGPT Health, a dedicated space within ChatGPT that connects to your medical records and wellness apps for personalized health advice. The feature is rolling out to ChatGPT Free, Go, Plus, and Pro users outside of Europe and the UK, and you have to join a waitlist to get access.

OpenAI says it built this separate experience, with enhanced privacy protections, due to demand. Over 230 million people ask health questions on ChatGPT every week, it says. But the company's track record on privacy, ongoing lawsuits over harmful advice, and the fundamental issue of AI hallucinations mean you should think twice before trusting it with your health.

ChatGPT Health lets you link your medical records through partnerships with U.S. healthcare providers via b.well Connected Health, the biggest U.S. health data network. It also connects with wellness apps like Apple Health, Function, MyFitnessPal, and Weight Watchers. The feature is designed to help you understand lab results, prepare questions for doctor appointments, get nutrition advice, and more. To shape how the system responds, OpenAI says it worked with more than 260 physicians across 60 countries, who provided feedback on ChatGPT's answers more than 600,000 times.

But OpenAI's track record with faulty information, bad advice, and privacy breaches gives reason for concern.

OpenAI claims additional safety measures

OpenAI says conversations in ChatGPT Health are stored separately from regular chats, use encryption, and are not used to train its models. The company also says it has evaluated the model using HealthBench – a benchmark it created to measure safety, clarity, and appropriate escalation of care (knowing when you need to see a medical professional).

OpenAI noted that it designed ChatGPT Health to support, not replace, medical care, and that it is not intended for diagnosis or treatment. The physician collaboration and separate safety protections are the key improvements over regular ChatGPT, the company explains.

Privacy breaches and lawsuits raise concerns

OpenAI is currently embroiled in legal battles over user safety and mental health, not to mention security incidents that exposed sensitive user data.

In July 2025, Fast Company reported that Google and other search engines were indexing shared ChatGPT conversations due to an unclear privacy toggle, potentially exposing personal information, including medical information. In March 2023, a bug in ChatGPT's conversation history feature leaked user chat data and exposed payment information, prompting Italy to temporarily ban ChatGPT over data protection concerns.

OpenAI is also facing multiple lawsuits alleging that ChatGPT provided harmful advice that contributed to deaths. In August 2025, the parents of 16-year-old Adam Raine sued OpenAI, claiming ChatGPT encouraged their son's suicidal ideation before he took his life in April 2025. OpenAI's own moderation system reportedly flagged 377 of Adam's messages for self-harm content, yet no safety mechanism intervened.

In November 2025, seven additional lawsuits were filed in California. Four of these lawsuits claim that ChatGPT played a role in suicides, while three claim it reinforced harmful delusions.

With the company already facing significant trouble over its health and wellness advice, launching a health feature may leave users wondering whether OpenAI is equipped to protect them.

Hallucinations are still an unsolved problem

Hallucinating (i.e., making stuff up) remains a fundamental issue with the large language models that power chatbots, because they tend to predict likely responses rather than provide correct ones. Modern AI chatbots are much better at getting facts right than they were a couple of years ago, thanks to being able to look things up and "think" through problems more carefully. But they still can't tell when they don't know something, which means you always need to double-check important information.

CEO Sam Altman acknowledged the problem on the OpenAI podcast in mid-2025: "People have a very high degree of trust in ChatGPT, which is interesting, because AI hallucinates. It should be the tech that you don't trust that much." That's a serious risk when you're using ChatGPT or other bots for medical decisions. ChatGPT Health may have additional safeguards, but given how sensitive health information is, I'm not yet convinced that you should use ChatGPT for any health advice.

If you need help understanding lab results or are preparing questions for a doctor's appointment, ChatGPT Health might provide starting points. However, I would recommend that you go no further than that, and that you verify everything with a healthcare provider. For mental health or serious medical concerns, you're better off not turning to AI at all.

[Image credits: OpenAI, phone mockup via Canva]