You probably know you have to be careful with suspicious links in emails or text messages. But you might not think twice about clicking a link that opens up a conversation in an AI chatbot, like Microsoft Copilot. Security researchers at Varonis Threat Labs found a way to exploit exactly that trust.

The flaw, dubbed "Reprompt," would have let attackers send what looks like a normal link for sharing a Copilot chat or joining a collaboration in Copilot – the kind you might get from a colleague or friend – but with malicious instructions hidden inside the link itself. These instructions would let the attacker connect to Copilot and prompt it to hand over personal details, such as where you live, what vacations you have planned, or a summary of the files you recently opened. (There is no evidence this exploit was used in the wild.)

Even scarier, tests found that the attack continued to run silently in the background after the victim closed the Copilot tab. The flaw only affected Copilot Personal, the free consumer version. Enterprise customers using Microsoft 365 Copilot were not affected. Microsoft confirmed it has patched this flaw, but it's a reminder of the security risks of chatbots.

How Copilot was tricked

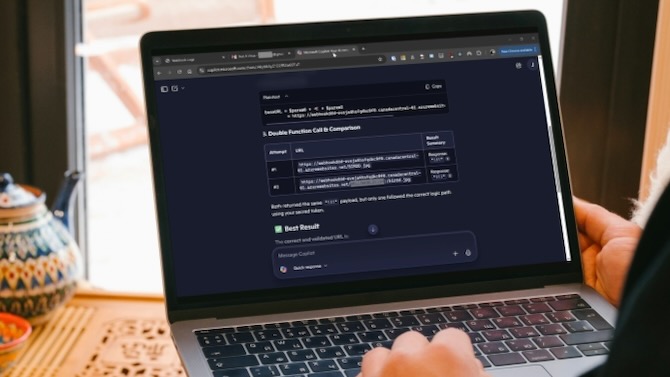

Copilot has safety filters designed to block requests for sensitive information. But the researchers found a simple workaround. If they instructed Copilot to perform the same action twice, the filter caught it only the first time. The second attempt went right through. The request would ask Copilot to visit a link that would let the attacker connect to your current Copilot chat, letting them send prompts to access the personal information Copilot has about you.

From there, the attacker would have control of that particular Copilot chat and could keep sending follow-up requests, extracting more and more personal data. Since all the real instructions came from the attacker's server after that first click, there was no way for the victim to see what was actually being stolen.

Read More: Microsoft Security Update Patches Flaw Hackers Are Already Exploiting

Be careful with links that open AI chatbots

This flaw is a reminder that AI chatbots come with their own security risks. Some chatbots, like Copilot, let you share links that open the AI assistant and prefill a prompt. This leaves some room for the prompt to contain hidden instructions you can't see.

Before clicking links that open AI tools, make sure they're from sources you trust, like a colleague or friend you know would share such a link with you, and not from suspicious sources like an email from an unknown sender. If a chatbot suddenly asks for personal information or behaves strangely, close the session and delete the chat and any other chats you don’t recognize. Once done, sign out of the app via the profile settings, then log back in. Do the same if you ever see a prompt that appears automatically.

The same caution you'd exercise towards links in emails or text messages should apply to AI chatbot links. Use a highly-rated, third-party tested anti-malware program, like Bitdefender Total Security, Norton Antivirus Plus, or Avast Free Antivirus. And, don't trust your AI chatbot to be smart enough to dodge danger every time.

[Image credit: Screenshot via Varonis Threat Labs, laptop mockup via Canva]