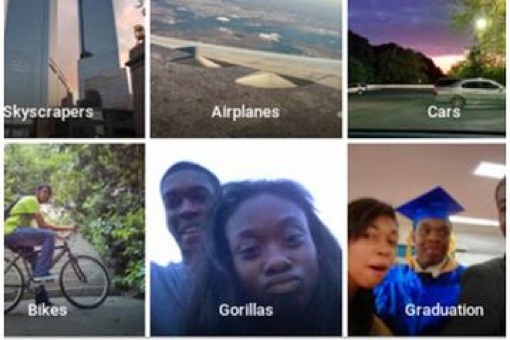

Internet search giant Google has found itself mired in controversy this week after its Google Photos algorithm tagged two black people as “gorillas.” The offensive tag was discovered by Twitter user Jacky Alciné (@jackyalcine), who posted a screenshot showing how Google Photos had labeled a photo of his friend.

“Google Photos, y’all f***ed up,” Alciné wrote on Twitter. “My friend’s not a gorilla.”

The comment quickly reached Google management, which issued an apology and blamed what it called an imperfect algorithm. “We’re appalled and genuinely sorry that this happened,” a Google spokesperson said. “There is still clearly a lot of work to do with automatic image labeling, and we’re looking at how we can prevent these types of mistakes from happening in the future.”

Specifically, the company says it is working to improve how its software analyzes skin tones. It has also temporarily disabled the “gorillas” category to prevent the incident from happening again.

The Wall Street Journal notes that while Google has been reasonably good at keeping offensive content from reaching the public, it occasionally stumbles. Earlier this year, the company drew bad press over its YouTube Kids filters, which failed to block some inappropriate content. With this type of technology, Google notes, it is “nearly impossible to have 100% accuracy.”

Artificial intelligence expert Babak Hodjat, chief scientist at Sentient Technologies, agrees. “We need to fundamentally change machine learning systems to feed in more context so they can understand cultural sensitivities that are important to humans,” he said. “Humans are very sensitive and zoom in on certain differences that are important to us culturally. Machines cannot do that. They can’t zoom in and understand this type of context.”

[Image credit: Jacky Alciné]