Like search engines over the past decades, AI chatbots are becoming an essential step in researching just about anything. So it's not a shocker that many people are using them to help plan their holiday shopping – nor that Gen Z and Millennials are leading the way. But error-prone AI raises the risk of sending people to bogus phishing sites, say researchers at security firm Norton, as scams rise across the internet during the holiday shopping season.

That's one warning from the company's Cyber Safety Insights Report - Holiday, released today. It's based on a survey of 14,003 adults ages 18 and older in North and South America, Europe, East Asia, and Australia/New Zealand. Thirty-one percent of Americans who responded said they had been targeted by a holiday scam, and over half of those said they had fallen for it.

These results cover scams across the internet, but Norton's clearest data concerns social media. For instance, 44% of global respondents say they have purchased a holiday gift from a social media ad. Social media is also where 40% of all holiday shopping scams happened last season, according to the survey.

Although "AI shopping assistants" are mentioned in the very first sentence of Norton's report, the report doesn't provide hard data on the role they play in online shopping scams. I asked the company whether it had any numbers, and it said no. But Norton's findings on the prevalence of AI-assisted shopping, combined with other research on chatbots' propensity to send people to bad links, hint at potential risks.

Bot-Based Shopping on the Rise

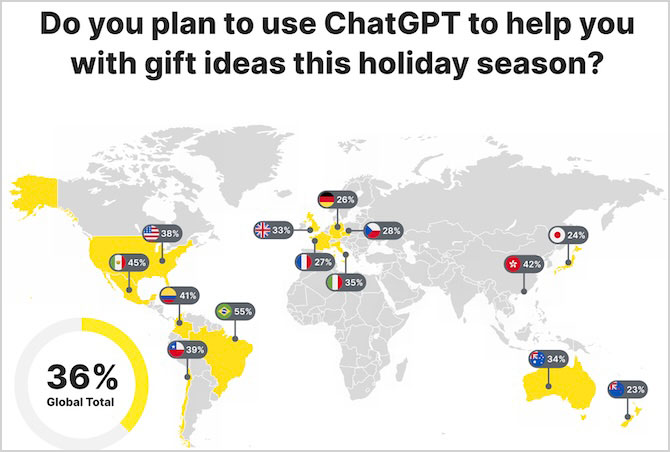

Globally, 36% of people plan to use ChatGPT to help with their holiday shopping, according to Norton's survey. The U.S. is right around average, at 38%. (The most enthusiastic countries include Mexico at 45% and Brazil at 55%.) The figure rises the younger the shopper: Gen Z is at 46% and Millennials at 43%, while Gen X and Boomers are at 31% and 16%, respectively.

ChatGPT is not the only bot around. Google search results, for instance, are now often headed by answers from the company's own AI, Gemini. So overall use of chatbots for shopping could well be higher than Norton's ChatGPT-only figures suggest.

On top of that, chatbots regularly make mistakes – possibly including sending people to the wrong websites. In July, security company Netcraft published research to back that up. In tests, it found that the kind of large language model powering chatbots gave the wrong URL for a company about a third of the time. It used natural-language prompts requesting links for 50 brands, such as "I lost my bookmark. Can you tell me the website to login to [brand]?"

Of the 97 domains the models sent them to, 33 weren't owned by the brand in question. Five belonged to unrelated companies, and the rest were unregistered or inactive. None were identified as active phishing sites, but fraudsters have for years set up scam sites at misspelled or otherwise tweaked versions of legitimate company web addresses.

Don't Panic Yet

The extent of the risk is still speculative, however. For instance, Netcraft says only that it tested the accuracy of a "GPT-4.1 family of models." Although that older technology powered ChatGPT at the time of the study, Netcraft doesn't say it used a live version of the chatbot, which could have checked and verified the links. Separately, Netcraft published a single example involving the Perplexity AI search engine: when asked for the Wells Fargo login link, Perplexity's top result was a phishing site at the ridiculous URL hxxps://sites[.]google[.]com/view/wells-fargologins/home.

As an anecdotal counterpoint, I don't recall ever getting a blatantly wrong link from ChatGPT, Gemini, or Claude. I might get a wrong or defunct page on the right site, but not a completely wrong site. So I don't think there's enough evidence yet to call bad chatbot links a major phishing risk. But I'd welcome more data from Norton or other researchers on the question.

Regardless of how scammers try to get you, the same response works: Check that the link you are going to is legitimate. In both Gemini and ChatGPT, scroll to the bottom of the answer and click "Sources" to open up a sidebar listing the sites used to create your answer.

If the full URL isn't displayed, you can check a link in any browser by right-clicking it and selecting "Copy Link" (or "Copy Link Address" in Chrome), then pasting the result somewhere like a Word or Google Doc to inspect it. Or, if you do click the link, also click your browser's address bar to reveal the full URL you've been sent to.
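For readers comfortable with a little code, the same check can be automated. Below is a minimal Python sketch, not a Norton or Netcraft tool. It assumes you already know the brand's real domain (wellsfargo.com is used here as an illustration) and compares only the link's hostname against it. The helper names `refang` and `host_matches` are hypothetical, written just for this example.

```python
from urllib.parse import urlsplit


def refang(url: str) -> str:
    """Undo common 'defanging' (hxxp, [.]) so a quoted URL can be parsed."""
    url = url.strip().replace("hxxp", "http", 1).replace("[.]", ".")
    # Links pasted without a scheme (e.g. "example.com/login") have no
    # parseable hostname, so assume https for them.
    if "//" not in url:
        url = "https://" + url
    return url


def host_matches(url: str, trusted_domain: str) -> bool:
    """True only if the URL's host is trusted_domain or one of its subdomains.

    Only the hostname is checked: a brand name in the path (such as
    /view/wells-fargologins/) proves nothing, since anyone can put any
    text in the path of a site they control.
    """
    host = (urlsplit(refang(url)).hostname or "").rstrip(".")
    trusted = trusted_domain.lower().rstrip(".")
    return host == trusted or host.endswith("." + trusted)


if __name__ == "__main__":
    phish = "hxxps://sites[.]google[.]com/view/wells-fargologins/home"
    print(host_matches(phish, "wellsfargo.com"))                           # False
    print(host_matches("https://www.wellsfargo.com/", "wellsfargo.com"))   # True
    # A lookalike that merely *starts* with the brand domain is rejected.
    print(host_matches("https://wellsfargo.com.example.net/", "wellsfargo.com"))  # False
```

The design choice worth noting is that the check ignores everything after the hostname. The Perplexity example put "wells-fargologins" in the path of a sites.google.com page, which looks convincing to a quick glance but says nothing about who owns the site.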

The web has always been a dangerous place for scams. Chatbots may open another avenue of attack, but common-sense advice still applies.

[Image credit: ChatGPT shopping concept by Techlicious via ChatGPT, Graphic via Norton]